1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

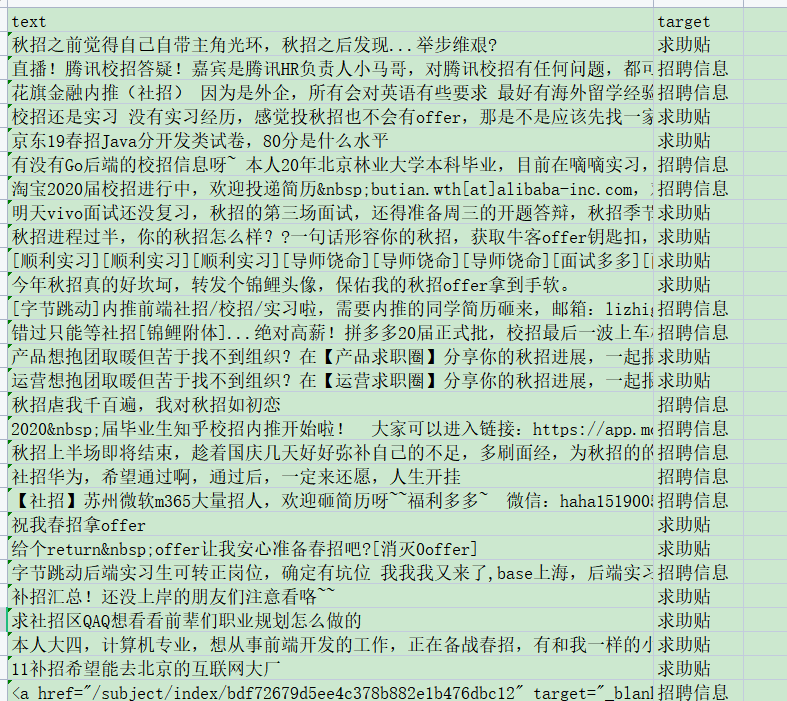

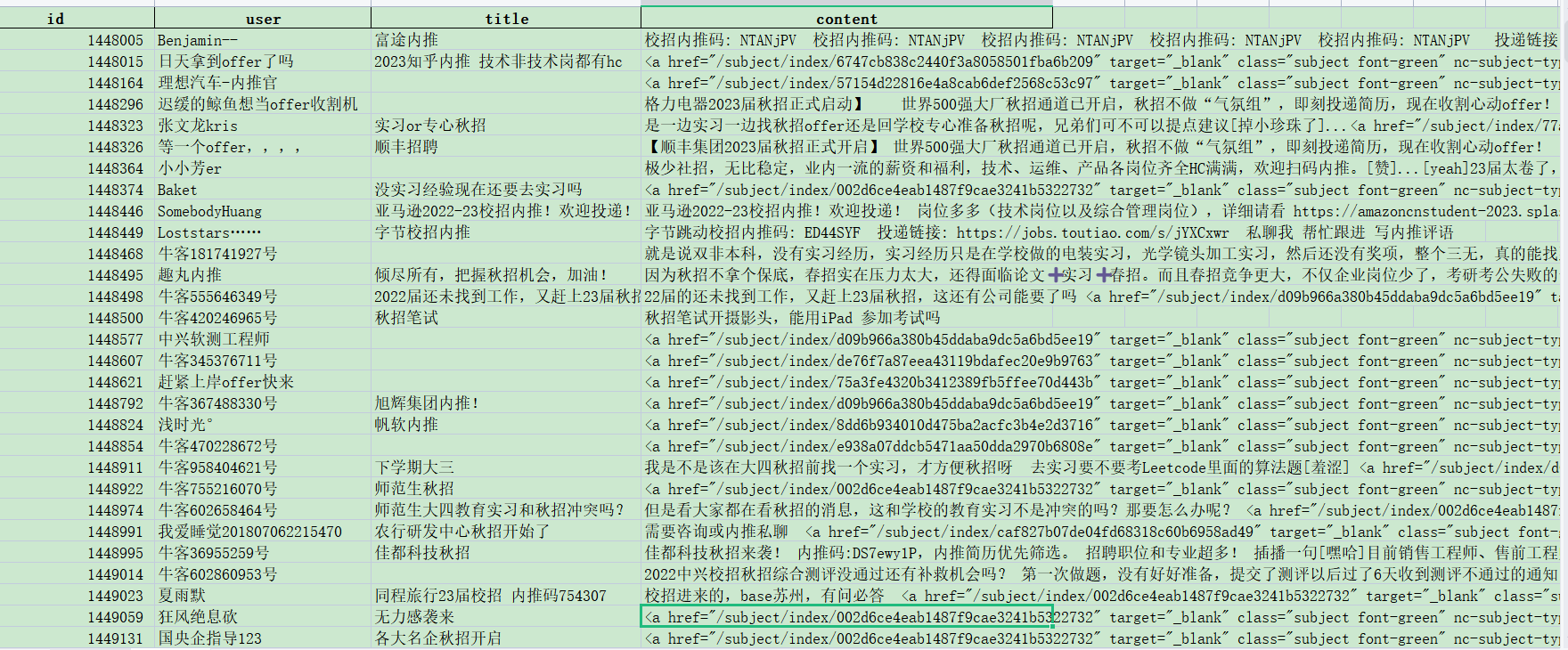

| import pandas as pd

import numpy as np

from datasets import load_dataset

from transformers import AutoTokenizer, AutoModelForMaskedLM, AutoModelForSequenceClassification

from transformers import TrainingArguments, Trainer

from sklearn.metrics import f1_score, roc_auc_score, accuracy_score, classification_report

from transformers import EvalPrediction

import evaluate

metric = evaluate.load("seqeval")

model_name = "uer/chinese_roberta_L-4_H-512"

tokenizer = AutoTokenizer.from_pretrained(model_name)

max_input_length = 128

label2id = {

'招聘信息':0,

'经验贴':1,

'求助贴':2

}

id2label = {v:k for k,v in label2id.items()}

def preprocess_function(examples):

model_inputs = tokenizer(examples["text"], max_length=max_input_length, truncation=True)

labels = [label2id[x] for x in examples['target']]

model_inputs["labels"] = labels

return model_inputs

raw_datasets = load_dataset('csv', data_files={'train': 'train.csv', 'test': 'test.csv'})

tokenized_datasets = raw_datasets.map(preprocess_function, batched=True, remove_columns=raw_datasets['train'].column_names)

def multi_label_metrics(predictions, labels, threshold=0.5):

probs = np.argmax( predictions, -1)

y_true = labels

f1_micro_average = f1_score(y_true=y_true, y_pred=probs, average='micro')

accuracy = accuracy_score(y_true, probs)

print(classification_report([id2label[x] for x in y_true], [id2label[x] for x in probs]))

metrics = {'f1': f1_micro_average,

'accuracy': accuracy}

return metrics

def compute_metrics(p: EvalPrediction):

preds = p.predictions[0] if isinstance(p.predictions, tuple) else p.predictions

result = multi_label_metrics(predictions=preds, labels=p.label_ids)

return result

model = AutoModelForSequenceClassification.from_pretrained(model_name,

num_labels=3,

)

batch_size = 64

metric_name = "f1"

training_args = TrainingArguments(

f"/root/autodl-tmp/run",

evaluation_strategy = "epoch",

save_strategy = "epoch",

learning_rate=2e-4,

per_device_train_batch_size=batch_size,

per_device_eval_batch_size=batch_size,

num_train_epochs=10,

save_total_limit=1,

weight_decay=0.01,

load_best_model_at_end=True,

metric_for_best_model=metric_name,

fp16=True,

)

trainer = Trainer(

model,

training_args,

train_dataset=tokenized_datasets["train"],

eval_dataset=tokenized_datasets["test"],

tokenizer=tokenizer,

compute_metrics=compute_metrics

)

trainer.train()

print("test")

print(trainer.evaluate())

trainer.save_model("bert")

predictions, labels, _ = trainer.predict(tokenized_datasets["test"])

predictions = np.argmax(predictions, axis=-1)

print(predictions)

print(labels)

|